Imagine asking your LLM for today’s date, only to receive an outdated response.

This limitation is due to the model’s training cut-off.

By adding web search, your model can fetch up-to-date information and answer questions about events and details outside its static training set.

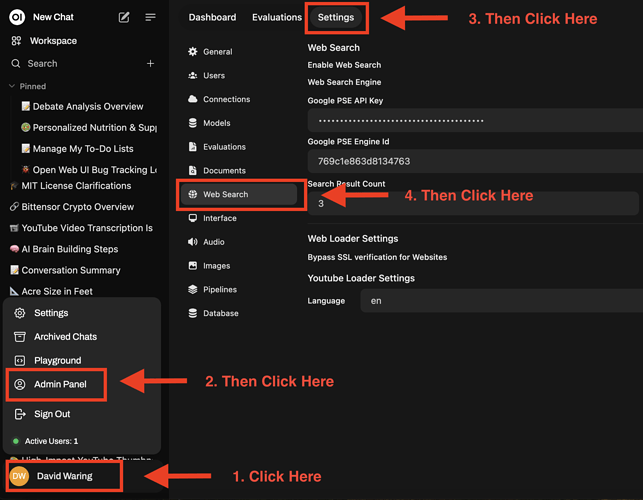

Configuring Web Search in Open WebUI

To enable your LLM to access the web, follow the steps below:

- Access Settings: Head to your admin panel and navigate to the Settings tab.

- Select a Web Search Option: Choose your preferred search engine. We’ve found that Google gives the best results and offers a free usage tier, so that’s the one we’ll set up in this lesson. The process should be similar for the other search options.

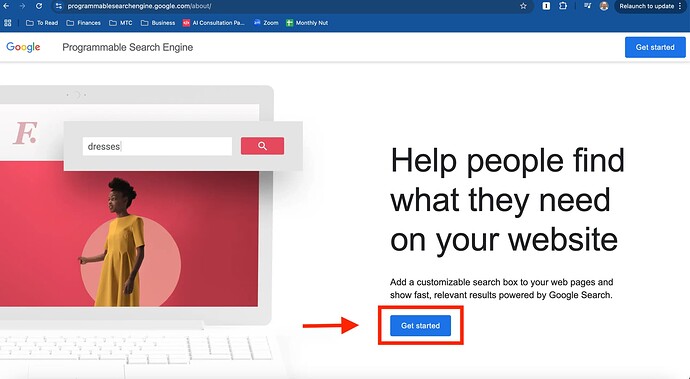

- Create Your Search Engine: Head to Google’s Programmable Search Engine and click “Get started”.

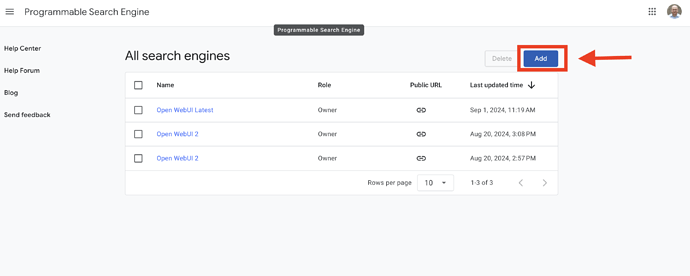

Click “Add”.

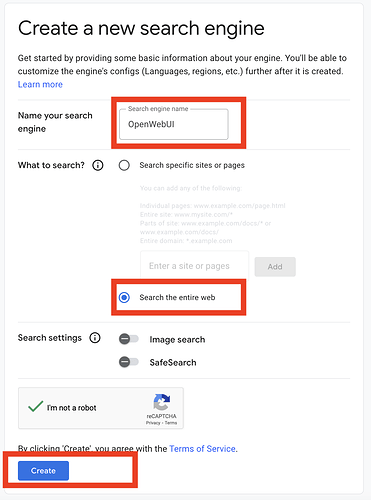

Set up your search engine by naming it, selecting “Search the entire web”, and then clicking “Create”.

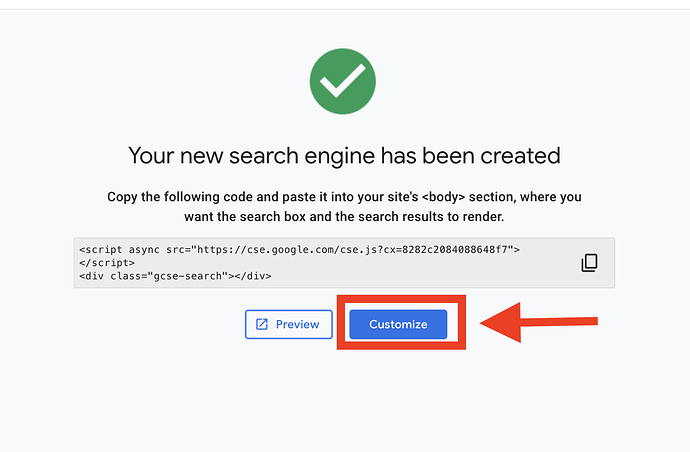

Then click “Customize”.

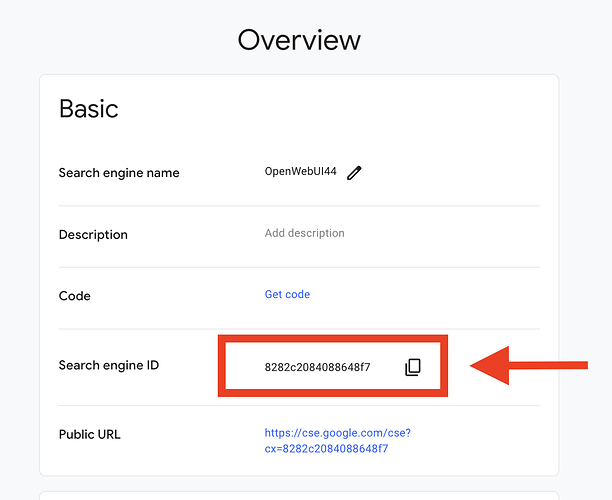

Copy the Search engine ID:

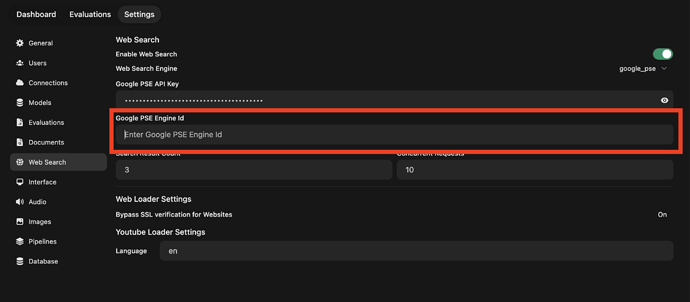

Paste it into the engine ID field of the web search settings in Open WebUI:

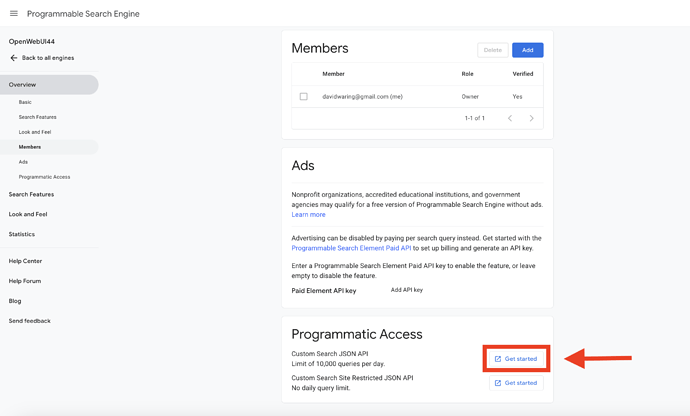

To get the API key, scroll down on the same page and click “Get started” next to the JSON API.

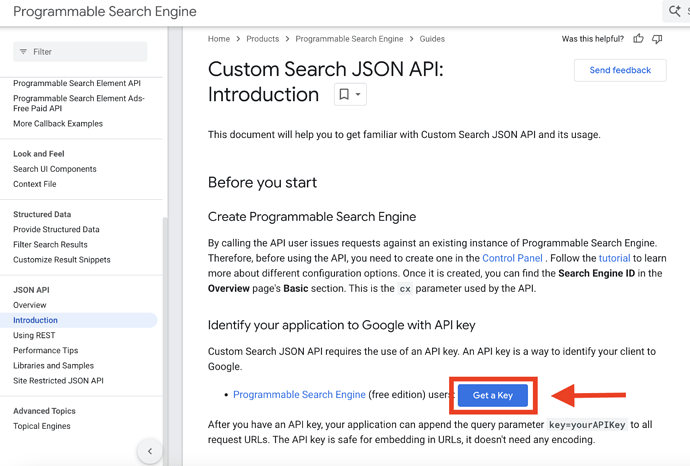

Then click “Get a Key”, name and create your API key, and click the copy button.

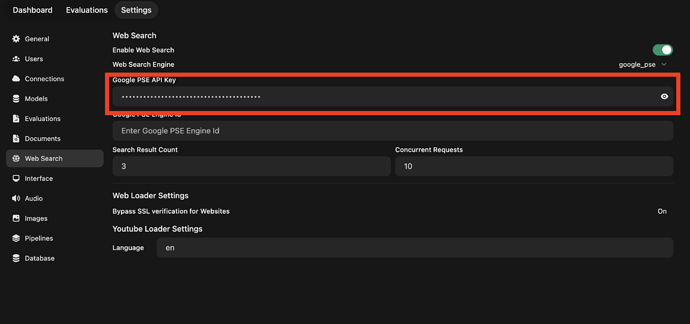

Finally, paste the API key into the API key field in Open WebUI:

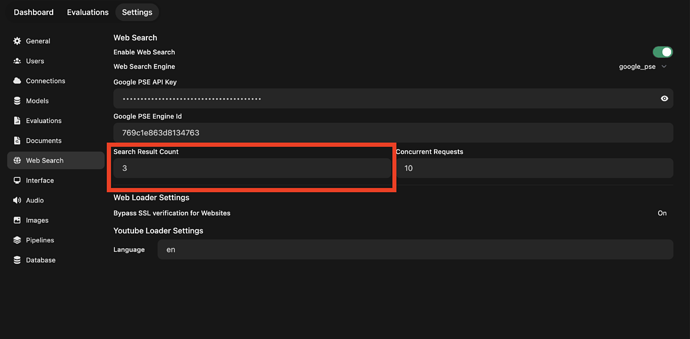

- Set the Search Scope: Choose how many sites to query. More sites give more comprehensive results but longer response times, which is why we normally keep the default of 3.
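You may want to confirm that the Search Engine ID and API key work before you rely on the chat UI. The sketch below calls Google’s Custom Search JSON API directly, outside Open WebUI. It makes three assumptions:

- It uses the `https://www.googleapis.com/customsearch/v1` endpoint with its `key`, `cx`, `q`, and `num` parameters.
- It reads your credentials from two hypothetical environment variables, `PSE_API_KEY` and `PSE_ENGINE_ID`, which are named here for illustration only.
- The `num` argument loosely mirrors the search scope setting above. The API returns at most 10 results per request.

This is a standalone check, not how Open WebUI itself issues the request.

```python
"""Sanity-check a Google Programmable Search Engine ID and API key.

Assumption: credentials come from the hypothetical environment variables
PSE_API_KEY and PSE_ENGINE_ID (names chosen for this example only).
"""
import json
import os
import urllib.parse
import urllib.request

ENDPOINT = "https://www.googleapis.com/customsearch/v1"


def build_search_url(api_key: str, engine_id: str, query: str, num: int = 3) -> str:
    """Return a Custom Search JSON API URL asking for `num` results."""
    # The API accepts 1-10 results per request, so clamp the requested count.
    num = max(1, min(num, 10))
    params = {"key": api_key, "cx": engine_id, "q": query, "num": num}
    return f"{ENDPOINT}?{urllib.parse.urlencode(params)}"


def extract_links(response: dict) -> list[str]:
    """Pull the result URLs out of a parsed JSON response."""
    # "items" is absent when the search returns no results.
    return [item["link"] for item in response.get("items", [])]


def search(api_key: str, engine_id: str, query: str, num: int = 3) -> list[str]:
    """Run one live query; raises urllib.error.HTTPError on a bad key or ID."""
    url = build_search_url(api_key, engine_id, query, num)
    with urllib.request.urlopen(url, timeout=10) as resp:
        return extract_links(json.load(resp))


if __name__ == "__main__":
    key = os.environ.get("PSE_API_KEY")
    cx = os.environ.get("PSE_ENGINE_ID")
    if not key or not cx:
        raise SystemExit("Set PSE_API_KEY and PSE_ENGINE_ID first.")
    for link in search(key, cx, "today's date", num=3):
        print(link)
```

Run it with both variables set. If the key or engine ID is wrong, `urlopen` raises an `HTTPError` instead of printing links.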

Executing Web Searches for Real-Time Information

Once your settings are configured, it’s time to see them in action!

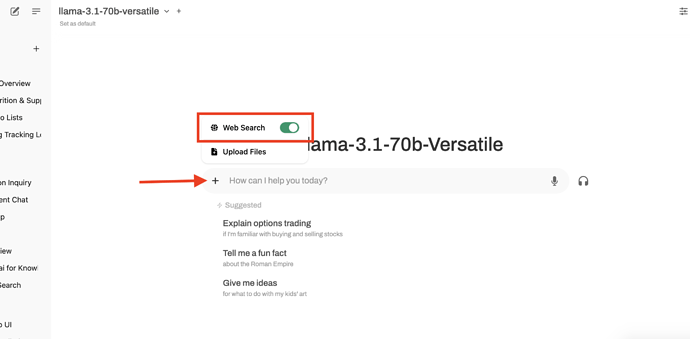

- Select a Model and Enable Web Search: Choose your model (e.g., Llama 3.2) and make sure web search is switched on under the chat’s “More” options.

- Ask Your Question: For instance, ask “What is the date today?” The model checks the search results from online sources before answering and returns the current date, e.g., October 16, 2024.
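Why does enabling web search fix the date problem? Conceptually, the search results are fetched first and placed in the model’s context alongside your question. The model then answers from fresh text rather than from its training data alone.

The sketch below shows that idea in its simplest form. It is illustrative only:

- The `SearchResult` type and `build_prompt` helper are hypothetical.
- The snippets are placeholders.
- Open WebUI’s own pipeline is more involved than this plain concatenation.

```python
"""Illustrative only: fold search results into a prompt.

SearchResult and build_prompt are hypothetical helpers, not Open WebUI code.
"""
from dataclasses import dataclass


@dataclass
class SearchResult:
    url: str
    snippet: str


def build_prompt(question: str, results: list[SearchResult], max_results: int = 3) -> str:
    """Prepend up to `max_results` numbered sources to the user's question."""
    sources = "\n".join(
        f"[{i}] {r.url}\n{r.snippet}"
        for i, r in enumerate(results[:max_results], start=1)
    )
    return (
        "Answer using the sources below, which were retrieved just now.\n\n"
        f"{sources}\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    # Placeholder results; a real search step would supply these.
    demo = [
        SearchResult("https://example.com/a", "Placeholder snippet A."),
        SearchResult("https://example.com/b", "Placeholder snippet B."),
    ]
    print(build_prompt("What is the date today?", demo))
```

Here `max_results` stands in for the search scope setting. Each extra source adds text the model has to read, which is one reason higher counts slow responses down.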

Next Steps

Continue to our next lesson to learn how to use OpenAI models like GPT-4o within Open WebUI.