Hey Eric,

It looks like it’s straightforward to get Docker up and running, but a little more involved to get Ollama running at startup.

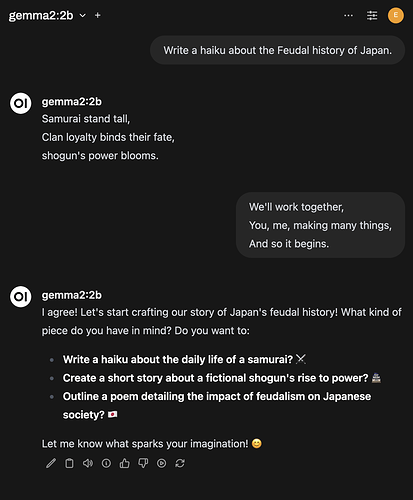

I asked my Open WebUI digital brain how to do it, and this is what I got back.

Let me know what you think!

Thanks

Dave

To ensure that Ollama and Docker run automatically at startup on your Mac, you’ll generally need to set them up as login items or use startup scripts. Below is a step-by-step guide to achieve this:

For Docker (via GUI):

Docker Desktop for Mac comes with an easy “auto-start” setting that you can enable via the user interface.

- Open Docker Desktop:

  - Go to your Applications folder and open Docker.

- Enable Auto Startup:

  - Click on the Docker icon in the Mac’s menu bar (top-right corner).

  - Choose Preferences or Settings.

  - In the menu, navigate to General settings and check the box labeled Start Docker Desktop when you log in.

This ensures Docker Desktop starts automatically when you log in to your Mac.

For Ollama (via Terminal):

For the Ollama command-line tool, you can use a CLI-based approach to run it automatically. You can achieve this by creating a launch agent, which is a launchd plist file that macOS loads when you log in.

Step 1: Create the Launch Agent File

You’ll need to create a property list (plist) file that defines how Ollama should be run at startup.

- Open the Terminal app.

- Use a command-line text editor (like nano) to create a com.ollama.startup.plist file in the ~/Library/LaunchAgents directory:

nano ~/Library/LaunchAgents/com.ollama.startup.plist

- In the nano editor, enter the following content into the plist file. Replace /path/to/ollama with the full path to the Ollama binary on your system (running which ollama in the Terminal prints it):

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">

<plist version="1.0">

<dict>

<key>Label</key>

<string>com.ollama.startup</string>

<key>ProgramArguments</key>

<array>

<string>/path/to/ollama</string>

<string>serve</string> <!-- Or whichever command starts Ollama. -->

</array>

<key>RunAtLoad</key>

<true/>

</dict>

</plist>

- Once done, press CTRL + O and then Enter to save the file, then CTRL + X to exit.
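As an alternative to typing the file by hand in nano, the same plist can be generated from a small shell script. This is only a sketch: the function name write_ollama_plist is made up for illustration, and it assumes the ollama binary is already on your PATH. It writes the same label and arguments as the plist above.

```shell
#!/bin/sh
# Hypothetical helper (not part of Ollama): writes the launch agent plist.
# $1 = full path to the ollama binary, $2 = file to write the plist to.
write_ollama_plist() {
  cat > "$2" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
<dict>
  <key>Label</key>
  <string>com.ollama.startup</string>
  <key>ProgramArguments</key>
  <array>
    <string>$1</string>
    <string>serve</string>
  </array>
  <key>RunAtLoad</key>
  <true/>
</dict>
</plist>
EOF
}

# Example usage on a Mac (uncomment to run):
# mkdir -p ~/Library/LaunchAgents
# write_ollama_plist "$(command -v ollama)" ~/Library/LaunchAgents/com.ollama.startup.plist
# plutil -lint ~/Library/LaunchAgents/com.ollama.startup.plist   # checks the plist syntax
```

Save it as a script file rather than pasting it into an interactive shell. The plutil -lint line is optional, but it catches typos in the XML before launchd ever sees the file.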

Step 2: Load the Launch Agent

Now, load the created plist file to make it active:

launchctl load ~/Library/LaunchAgents/com.ollama.startup.plist

This starts the Ollama process immediately and again each time you log in. Because the file lives in ~/Library/LaunchAgents, it runs at login rather than at boot.
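On recent macOS versions, launchctl load still works but is treated as a legacy subcommand. The sketch below shows the newer bootstrap form for the same agent, assuming the plist from Step 1 is in place. The variable names are just for illustration.

```shell
#!/bin/sh
# Sketch only: newer launchctl syntax for loading the same launch agent.
LABEL="com.ollama.startup"
PLIST="$HOME/Library/LaunchAgents/$LABEL.plist"
DOMAIN="gui/$(id -u)"   # the per-user GUI domain, built from your numeric user ID

if command -v launchctl >/dev/null 2>&1; then
  launchctl bootstrap "$DOMAIN" "$PLIST"          # load the agent into your login session
  launchctl print "$DOMAIN/$LABEL" | head -n 20   # show the agent's current state
else
  echo "launchctl not found (this only works on macOS)"
fi
```

Use either this or launchctl load, not both: bootstrapping an agent that is already loaded reports an error. To unload it later, launchctl bootout "gui/$(id -u)/com.ollama.startup" is the matching command.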

Checking if Everything Works

- Restart your Mac, log in, and confirm that Docker starts automatically (look for the Docker icon in the menu bar).

- Confirm that Ollama is running by checking for its process in the Terminal:

ps aux | grep ollama
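One wrinkle with the command above is that grep usually shows up in its own results. A slightly more robust check might look like the sketch below. It assumes Ollama is listening on its default address (localhost, port 11434), and the function name check_ollama is made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: reports whether an Ollama process and its HTTP API are up.
check_ollama() {
  # pgrep -x matches the process name exactly and never matches itself.
  if pgrep -x ollama >/dev/null 2>&1; then
    echo "process: running"
  else
    echo "process: not found"
  fi
  # Assumes the default address; adjust it if you changed OLLAMA_HOST.
  if curl -s --max-time 2 http://localhost:11434/ >/dev/null 2>&1; then
    echo "api: responding"
  else
    echo "api: not responding"
  fi
}

check_ollama
```

If the process is running but the API is not responding, the path in the plist is the first thing to double-check.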

Managing Startup Items (Optional)

You can also add login items manually in System Settings (under General > Login Items on recent macOS versions, or Users & Groups > Login Items on older ones), but for command-line utilities like Ollama, using launchd is preferred.

You should now have both Docker and Ollama set up to start automatically when you log into macOS.